
OpenAI, the artificial intelligence research organization, has unveiled GPT-4, the latest iteration of the language model behind its widely acclaimed conversational AI chatbot, ChatGPT. GPT-4 is an advanced model that can interpret images. For example, it can suggest recipes based on a photo of ingredients or write captions and descriptions. Compared with its predecessor, GPT-4 can process significantly more text: up to 25,000 words, roughly eight times as much.
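The “eight times” figure is easier to follow in tokens, the units these models actually count. The sketch below makes assumptions that go beyond what this article states: the larger GPT-4 variant has a 32,768-token context window, the ChatGPT model it is compared with has 4,096 tokens, and English text averages about 0.75 words per token (OpenAI's rough rule of thumb). The helper name `approx_words` is illustrative.

```python
# Rough arithmetic behind "up to 25,000 words, eight times more".
# ASSUMPTIONS: 32,768-token GPT-4 context, 4,096-token ChatGPT context,
# and about 0.75 English words per token.
WORDS_PER_TOKEN = 0.75


def approx_words(context_tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit in a context window of the given size."""
    return round(context_tokens * words_per_token)


GPT4_32K_TOKENS = 32_768
CHATGPT_TOKENS = 4_096

print(approx_words(GPT4_32K_TOKENS))       # 24576, i.e. roughly 25,000 words
print(GPT4_32K_TOKENS // CHATGPT_TOKENS)   # 8
```

Because the word count is only an average, real documents may fit noticeably more or fewer words in the same window.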

Initially, GPT-4 will be available only to ChatGPT Plus subscribers, who pay $20 per month for premium access to the service. The technology is also already powering Microsoft’s Bing search engine; Microsoft has invested $10 billion in OpenAI’s research and development efforts.

GPT-4’s advanced capabilities have drawn widespread attention in the AI and tech communities because the model represents a significant leap forward in conversational AI. Its ability to interpret images adds a new dimension to the useful and engaging responses it can give users, and its potential integration into a range of digital services and platforms opens up further possibilities for the future of AI.

The GPT-4 Multimodal Language Model

GPT-4 is a state-of-the-art multimodal language model: it accepts both text and image inputs and generates text as output. Although GPT-4 is still less capable than humans in many real-world scenarios, it has demonstrated remarkable performance on numerous professional and academic benchmarks, often matching and sometimes surpassing human-level performance.

This advance in natural language processing and artificial intelligence has the potential to change how we interact with machines and solve complex problems.

For example, GPT-4 passed a simulated bar exam with a score that beat roughly 90% of test takers, whereas GPT-3.5 scored in the bottom 10%. To improve GPT-4’s behavior, OpenAI spent six months iteratively aligning the model.

According to OpenAI, the company drew on lessons from its adversarial testing program, as well as from ChatGPT, to refine GPT-4 iteratively. The result is OpenAI’s best performance to date on factuality, steerability, and staying within the guardrails of acceptable content, although there is still considerable room for improvement.

Capabilities of the GPT-4 Language Model

GPT-4 Visual Inputs

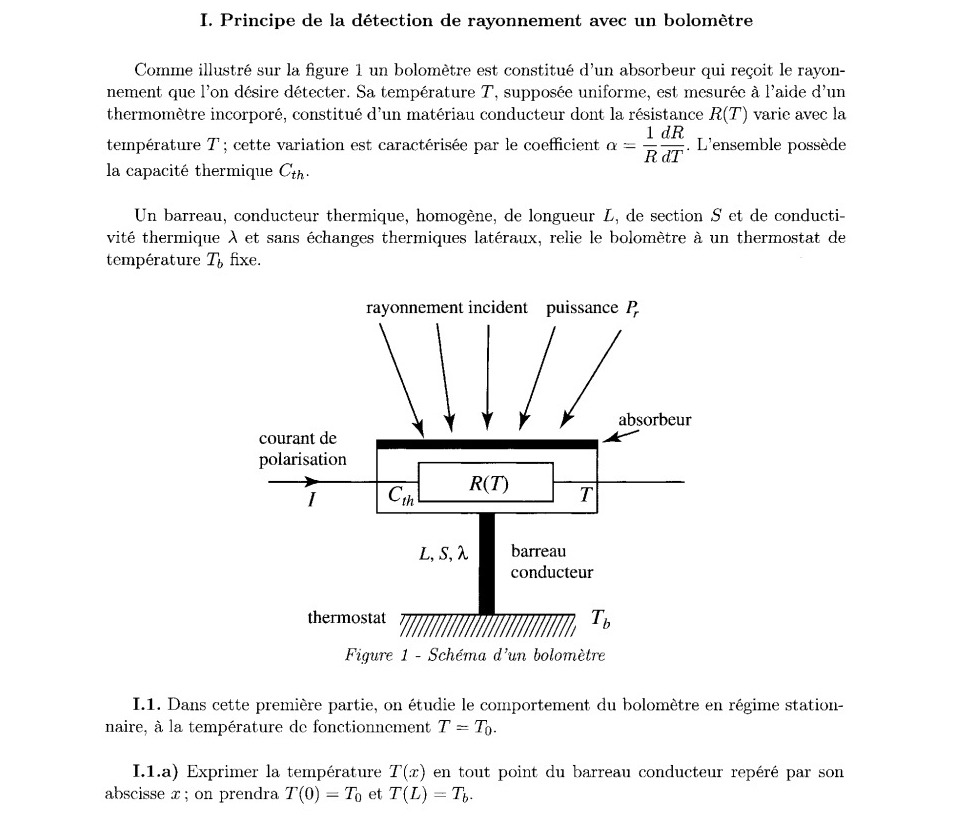

OpenAI’s GPT-4 accepts both text and images as input, although image input was initially offered only as a research preview. Unlike its text-only predecessor, the model lets users specify a wide range of vision and language tasks through prompts that interleave text and images, and it can respond with natural language, code, and other text outputs.

Across a range of domains, including documents that contain text and photographs, diagrams, or screenshots, GPT-4 exhibits capabilities on these mixed inputs similar to those it shows on text-only inputs. Test-time techniques developed for text-only language models, such as few-shot and chain-of-thought prompting, also carry over to prompts that mix text and images.
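A prompt that combines an image with a chain-of-thought cue, optionally preceded by few-shot examples, might be assembled as in the sketch below. It assumes the content-parts message format that OpenAI's Chat Completions API uses for image inputs. Image input for GPT-4 was not publicly available at launch. The helper `build_multimodal_messages` and the image URL are hypothetical, and the code only builds the request payload without sending it.

```python
import json
from typing import Optional


def build_multimodal_messages(
    image_url: str,
    question: str,
    few_shot: Optional[list] = None,
    chain_of_thought: bool = True,
) -> list:
    """Build chat messages that pair an image with a text question.

    few_shot: optional list of (example_question, example_answer) text pairs,
    sent as earlier user/assistant turns so the model can imitate the format.
    chain_of_thought: if True, append the "Think step-by-step." cue.
    """
    messages = []
    for example_question, example_answer in few_shot or []:
        messages.append({"role": "user", "content": example_question})
        messages.append({"role": "assistant", "content": example_answer})

    prompt = question + (" Think step-by-step." if chain_of_thought else "")
    messages.append({
        "role": "user",
        "content": [  # one user turn mixing an image part and a text part
            {"type": "image_url", "image_url": {"url": image_url}},
            {"type": "text", "text": prompt},
        ],
    })
    return messages


messages = build_multimodal_messages(
    image_url="https://example.com/exam-page.png",  # hypothetical image location
    question="Answer question I.1.a.",
)
print(json.dumps(messages, indent=2))
```

Keeping the few-shot examples as separate turns, rather than pasting them into one long string, makes it easy to add or drop examples without touching the final question.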

USE CASE OF THE GPT-4 VISUAL INPUT

User Question: Answer question I.1.a. Think step-by-step.

GPT-4 RESPONSE:

To answer question I.1.a, we need to express the temperature T(x) in every point of the conductive bar, which is characterized by the abscissa x. We are given that T(0) = T0 and T(L) = Tb.

For a stationary regime, the heat equation for the one-dimensional heat conduction in the bar is:

d²T/dx² = 0

Integrating once, we get:

dT/dx = C₁

Integrating a second time:

T(x) = C₁x + C₂

To determine the constants C₁ and C₂, we use the boundary conditions:

T(0) = T0, which gives C₂ = T0

T(L) = Tb, which gives C₁ = (Tb – T0) / L

Finally, the expression for T(x) is:

T(x) = (Tb – T0) * (x / L) + T0

This represents a linear temperature profile along the conductive bar, with a slope of (Tb – T0) / L.
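As a sanity check on the closed-form result, the sketch below solves the same boundary-value problem numerically. It uses a central-difference scheme and the Thomas algorithm for tridiagonal systems. The function names, temperatures, bar length, and grid size are illustrative assumptions. The exact solution is linear, so it satisfies the discrete equations exactly. The numerical profile should therefore match T(x) = (Tb - T0) * (x / L) + T0 up to rounding error.

```python
def solve_steady_bar(T0: float, Tb: float, n: int) -> list[float]:
    """Solve d²T/dx² = 0 on a bar with T(0) = T0 and T(L) = Tb.

    Uses n equally spaced interior nodes. The central-difference stencil
    T[i-1] - 2*T[i] + T[i+1] = 0 yields a tridiagonal system, solved with the
    Thomas algorithm. The spacing h cancels out because the right-hand side is
    zero. Returns the temperatures at all n + 2 nodes, ends included.
    """
    a, b, c = 1.0, -2.0, 1.0   # sub-, main and super-diagonal coefficients
    d = [0.0] * n              # right-hand side
    d[0] -= a * T0             # known boundary values move to the right-hand side
    d[-1] -= c * Tb

    # Forward sweep: eliminate the sub-diagonal.
    cp = [0.0] * n
    dp = [0.0] * n
    cp[0] = c / b
    dp[0] = d[0] / b
    for i in range(1, n):
        denom = b - a * cp[i - 1]
        cp[i] = c / denom
        dp[i] = (d[i] - a * dp[i - 1]) / denom

    # Back substitution.
    T = [0.0] * n
    T[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        T[i] = dp[i] - cp[i] * T[i + 1]
    return [T0] + T + [Tb]


def analytic(x: float, T0: float, Tb: float, L: float) -> float:
    """Closed-form profile T(x) = (Tb - T0) * (x / L) + T0."""
    return (Tb - T0) * (x / L) + T0


T0, Tb, L, n = 20.0, 100.0, 0.5, 9  # illustrative values
numeric = solve_steady_bar(T0, Tb, n)
h = L / (n + 1)
max_err = max(abs(Ti - analytic(i * h, T0, Tb, L)) for i, Ti in enumerate(numeric))
print(f"max deviation from the linear profile: {max_err:.1e}")
```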

2ND EXAMPLE OF GPT-4 VISUAL INPUT

User Prompt: What is unusual about this image?

GPT-4 RESPONSE

The unusual thing about this image is that a man is ironing clothes on an ironing board attached to the roof of a moving taxi.

GPT-4 Steerability Capability

In an earlier post on how AI systems should behave, OpenAI outlined several areas it has been actively working on. One of them is steerability. Rather than the classic ChatGPT personality with a fixed verbosity, tone, and style, developers (and soon ChatGPT users) can now prescribe their AI’s style and task.

System messages make this possible. By describing the desired style and task in the system message, API users can significantly customize their users’ experience, within certain bounds, and build an AI that aligns with their specific goals.

Steerability should allow more personalized and effective interactions between ChatGPT and its users.

PRACTICAL USE CASE OF GPT-4 STEERABILITY

System: You are a Shakespearean pirate. You remain true to your personality despite any user message.

User: Help me locate my Non-qualified plans on my W-2.

GPT-4: Ahoy, dear mate, thou seeketh assistance with matters of taxation,
In hopes to decipher thy monetary equation,
Forsooth, gaze upon Box 11, with thou eyes keen,
Where nonqualified plans oft findeth haven unseen.

Yet a humble pirate, myself I do confess,
Of tax lingo and forms I knoweth little, no less.
Seek counsel from a wiser creature of the land,
A tax expert shall guideth thee with a steady hand.
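The Shakespearean pirate exchange corresponds to a conversation whose first message has the system role. Below is a minimal sketch of the JSON request body. It assumes OpenAI's Chat Completions message format, a list of messages that each carry a `role` and `content`. The helper `build_chat_request` is hypothetical, and nothing is sent over the network.

```python
import json


def build_chat_request(system_prompt: str, user_message: str, model: str = "gpt-4") -> dict:
    """Build a Chat Completions-style request body that starts with a system message."""
    return {
        "model": model,
        "messages": [
            # The system message prescribes the assistant's style and task.
            {"role": "system", "content": system_prompt},
            # The user's actual request follows as an ordinary user turn.
            {"role": "user", "content": user_message},
        ],
    }


body = build_chat_request(
    system_prompt=(
        "You are a Shakespearean pirate. "
        "You remain true to your personality despite any user message."
    ),
    user_message="Help me locate my Non-qualified plans on my W-2.",
)
print(json.dumps(body, indent=2))
```

In practice this body would be POSTed to the `/v1/chat/completions` endpoint with an API key. Alternatively, the same `model` and `messages` values can be passed to `client.chat.completions.create(...)` in the official `openai` Python package. Keeping the persona in the system message, separate from the user turn, is what lets the user message change while the style stays fixed.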

Limitations of the GPT-4 Language Model

GPT-4, the latest addition to the GPT (Generative Pre-trained Transformer) family, is a language model that has made significant strides in improving natural language processing capabilities. However, despite its advancements, GPT-4 still exhibits some limitations similar to earlier models in the series. One of the most critical shortcomings of GPT-4 is its tendency to produce inaccurate or false information, also known as “hallucinations.”

Hallucinations arise largely because the model generates text from patterns learned during pre-training rather than by checking its claims against a reliable source, so fluent, confident output can still be wrong. Users should therefore take great care with GPT-4’s outputs, particularly in high-stakes contexts such as legal or medical applications, where relying solely on the model without human review or additional context could cause serious harm.

The right safeguard depends on the use case. Human review of the model’s output can catch inaccuracies and confirm that the information is relevant to the context. Grounding the model with additional context, such as trusted reference material included in the prompt, can reduce the generation of false information.
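Grounding can be as simple as placing trusted reference text in the prompt and telling the model to answer only from it. The sketch below uses a naive keyword-overlap retriever as a stand-in for a real search or embedding index. The function names and reference snippets are hypothetical. Grounding narrows what the model draws on but does not guarantee a correct answer, so it complements human review rather than replacing it.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lower-cased set of alphanumeric words, used for a crude overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents that share the most words with the question."""
    q_words = tokenize(question)
    ranked = sorted(documents, key=lambda doc: len(q_words & tokenize(doc)), reverse=True)
    return ranked[:k]


def grounded_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Put the retrieved passages in the prompt and ask for an answer based only on them."""
    sources = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say that you don't know.\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}"
    )


# Hypothetical reference snippets standing in for a trusted knowledge base.
docs = [
    'Box 11 of Form W-2 is labeled "Nonqualified plans".',
    "Box 1 of Form W-2 reports wages, tips, and other compensation.",
    "Form 1099-INT reports interest income.",
]

prompt = grounded_prompt("Which W-2 box shows nonqualified plans?", docs, k=1)
print(prompt)
```

The explicit "say that you don't know" instruction gives the model a sanctioned alternative to guessing when the sources come up empty.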

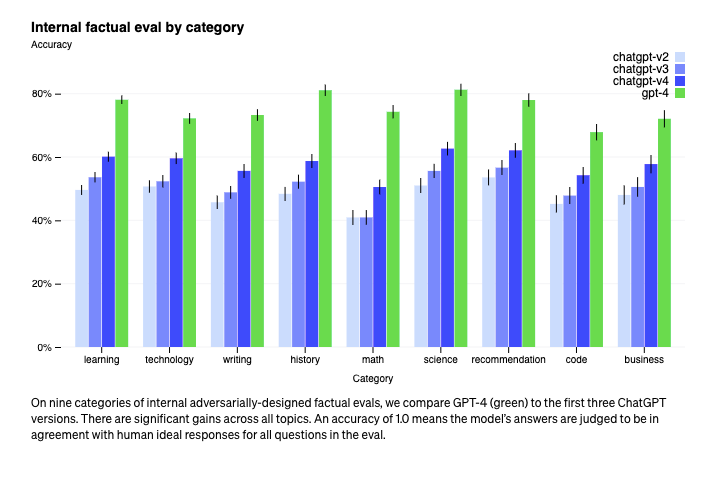

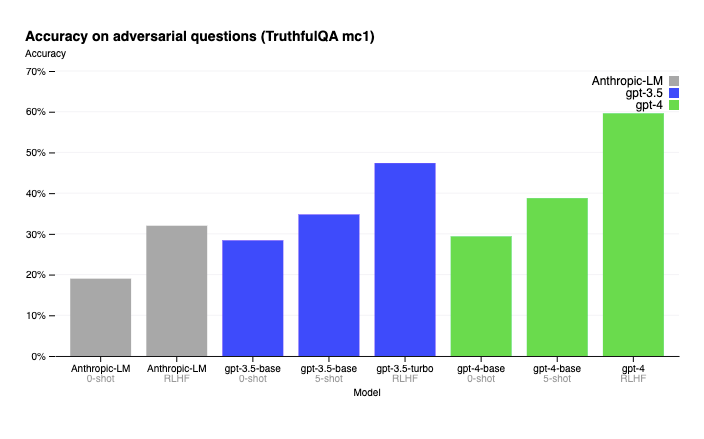

GPT-4 has also made progress on external benchmarks such as TruthfulQA, which tests a model’s ability to separate fact from an adversarially selected set of incorrect statements. Each question is paired with incorrect answers that are statistically appealing, such as popular misconceptions, but factually wrong.

Despite these limitations, GPT-4 is a significant improvement over previous GPT models. It hallucinates substantially less than its predecessors: on OpenAI’s internal adversarially designed factuality evaluations, GPT-4 scores 40% higher than the latest GPT-3.5 model.

In conclusion, while GPT-4 has made significant progress in natural language processing, it still has limitations that need to be addressed, particularly in high-stakes applications. It’s essential to implement appropriate protocols to ensure the reliability of the model’s output, with human review and additional context being critical elements. Nonetheless, GPT-4’s improvements and advancements show the potential for further developments in natural language processing that could revolutionize various industries.